Used Book Search – Quick & Reliable World Book Price Comparison

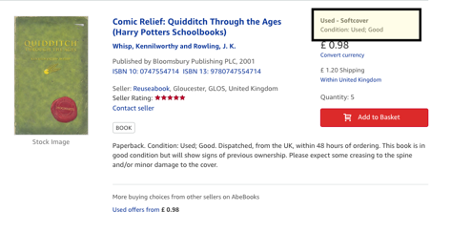

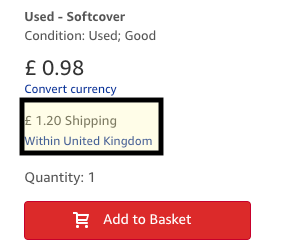

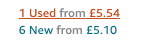

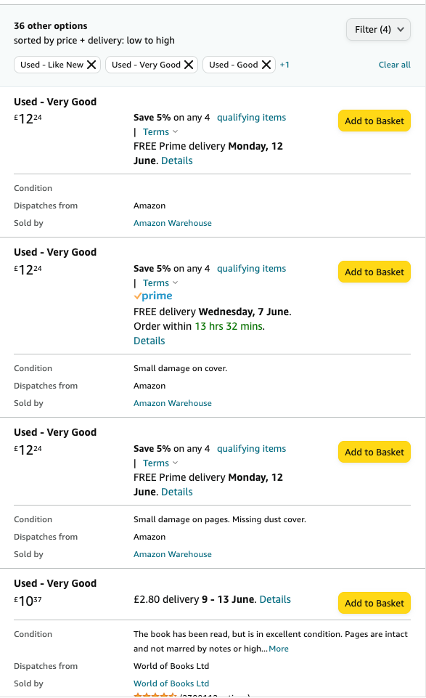

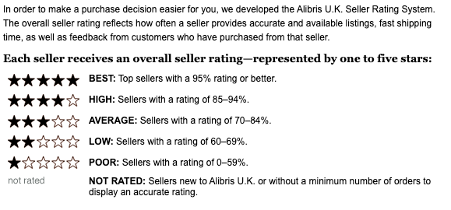

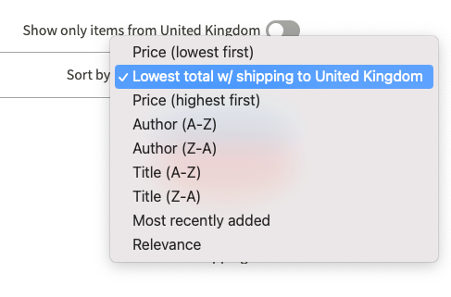

usedbooksearch.co.uk is a meta search engine for finding used books, textbooks, and antiquarian, rare or valuable, and out-of-print books. Search, find and buy second-hand books online from hundreds of bookstores worldwide. Our used book search engine is linked to thousands of online booksellers via a small number of book marketplaces, such as AbeBooks, Amazon Marketplace and Alibris.